This looks pretty amazing!

This is an article from 2016. Guess it didn’t take off…

I agree: neither AMD nor Nvidia cares about VR-related hardware needs. Quite the opposite. They keep pushing the myth that 3D and VR are dead, which gives them a reason not to spend resources on MR/VR needs. So I am counting on Chinese tech companies to finally ramp up something, not only for military purposes but also for business needs. I believe offices will partially go VR, so adequate hardware resources are required. PIMAX is a big step in that direction.

If this is off the table, I wonder why Pimax is one of the hardware partners involved in this project.

Oh, they are just faking real interest and involvement. IMHO it's just marketing. Yes, they spend a few resources and provide a little, but just below the minimum. Even listing tiny companies like PIMAX as hardware partners means little to them. If they were really interested in a suitable consumer VR solution, I guess SLI would have been pushed forward instead of pushed out, because it currently seems to be the only way to deliver binocular 3D vision with a sufficient number of pixels per eye (combined with a good FoV) at an acceptable frame rate. Even a 3090 has limits. They sell to miners in big deals, so why care about us?

VRSS (Variable Rate Supersampling) is a driver trick, just another variant comparable to foveated rendering.

It adds no quality but makes better use of existing resources, which, to be honest, I value.

Your argument just distracts from the fact that Nvidia (as well as AMD) does not care about real VR requirements.

Which means SLI/CrossFire urgently needs a revival to drive two truly high-resolution displays (and maybe we need 2 × DP 2.0). There is a reason for the Apple rumor about its own headset: people would buy the promised tech.

Apple with 2 × 8K displays? My 8KX brings my EVGA 3090 FTW3 Ultra Hybrid OC to its knees, even paired with a 10980XE @ 5.0 GHz. Let's hope something good shows up one of these days.

Exactly.

I have a ZOTAC 3090, even the worst 3090 version (!), and I'm lucky with it, paired with an AMD 5950 to run my 8KX. I would like to have DP 2.0 and SLI working for standard apps; you need that power for a pair of such displays. As for Apple: if the rumor is true, you would need quad-SLI RTX 3090s to run such a device.

AMD currently is just not relevant (unfortunately).

I fear Apple is only bluffing, which is sad, because competition drives development.

I think it's more of a forward-planning decision that this doesn't get much love. Considering that all the data from card A gets replicated to card B, you're requiring insane bandwidth, and looking forward, VR HMDs are only likely to increase frame rates to more closely match reality.

Apple's 8K device will have something like a 70° FOV, the kind of thing you'd see from a microLED.

There aren't any 8K screens yet besides microLED, so think Panasonic VR. There was a rumour of Apple working on a 16K VR headset, but with no details on the display type, and all the VR patents filed by Apple are for microLEDs.

It will probably run at 60-90 Hz, with foveated rendering.

Once again, higher resolution requires less GPU. We will definitely get better results running a 'Pimax Vision 16kX' equivalent at 0.75x TotalSR rather than a 'Pimax Vision 8kX' at 1.5x TotalSR.

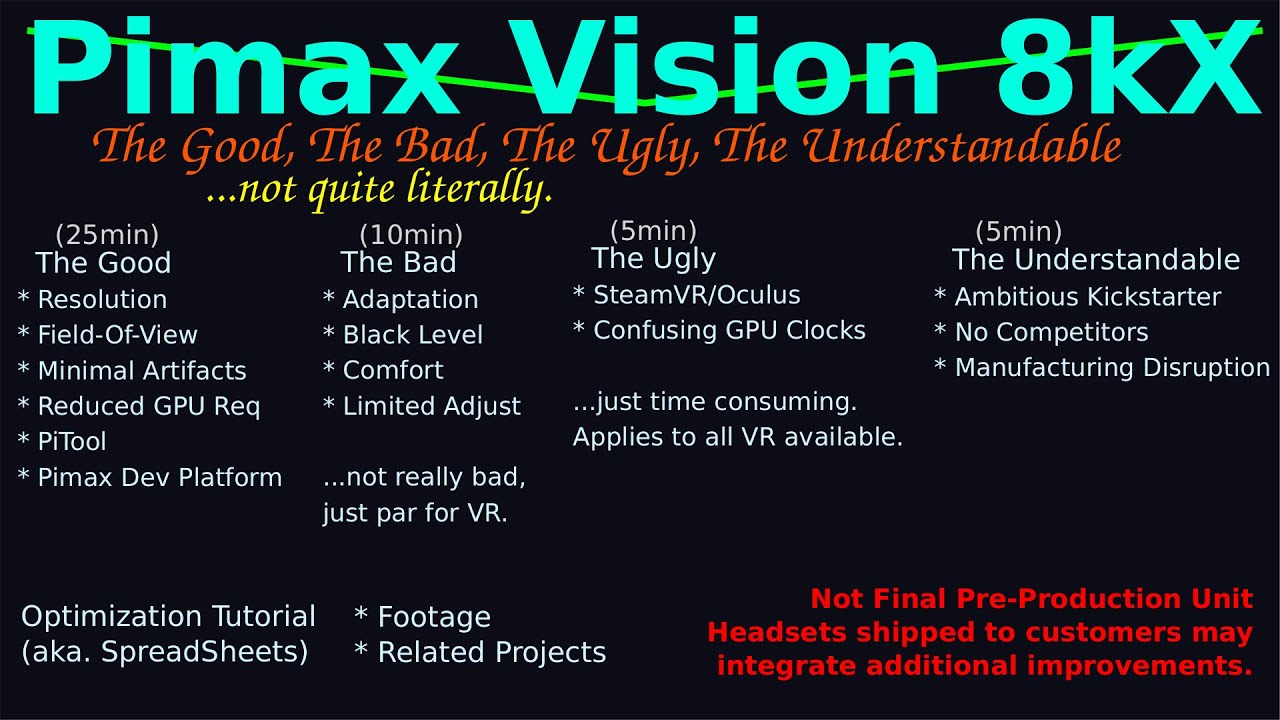
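The 0.75x vs 1.5x comparison can be checked with a little arithmetic. This is a minimal sketch under assumptions that are not from Pimax documentation: the 8kX panel is taken as 3840x2160 per eye, the 'Pimax Vision 16kX' is a hypothetical panel with both axes doubled (7680x4320), and TotalSR is treated as a per-axis scale factor, so rendering cost grows with its square. The helper `rendered_pixels` is hypothetical.

```python
# Hypothetical render-load comparison for the 16kX vs 8kX claim.
# Assumptions (not from Pimax docs): 8kX panel = 3840x2160 per eye,
# a hypothetical "16kX" doubles both axes (7680x4320), and TotalSR
# scales each axis, so pixel cost grows with the square of the factor.

def rendered_pixels(width, height, sr, eyes=2):
    """Pixels rendered per frame when each axis is scaled by sr."""
    return round(width * sr) * round(height * sr) * eyes

PANEL_8KX = (3840, 2160)
PANEL_16KX = (7680, 4320)  # hypothetical panel

load_8kx = rendered_pixels(*PANEL_8KX, sr=1.5)
load_16kx = rendered_pixels(*PANEL_16KX, sr=0.75)

print(f"8kX  @ 1.5x : {load_8kx:,} px/frame")
print(f"16kX @ 0.75x: {load_16kx:,} px/frame")
print("same render load:", load_8kx == load_16kx)
```

Under these assumptions both setups render 5760x3240 per eye, so the GPU load is the same while the denser panel shows more real pixels. If the factor instead scaled the total pixel count, the 16kX case would cost twice as much, so the claim depends on how TotalSR is defined.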

Actually, the Pimax Vision 8kX already works rather well. What I wish for more is better usability, to get more people into high-end VR: more IPD range, enough GPU power to turn off Smart Smoothing entirely, and maybe even local 'GeekSquad' techs who actually know how to fix this stuff for people.

"Once again, higher resolution requires less GPU."

Really? I assumed higher resolution per display requires more computing power. What exactly am I missing here? Is there some magic computing power around? The magic cloud, perhaps?

How does that fit with “enough GPU power to turn off smart smoothing entirely…”?

Please enlighten me.

Maybe the SLI solution requires a new architecture and fresh thinking, perhaps with more autonomy per main compute unit to reduce the SLI overhead.

Supersampling does not produce as sharp an image as a higher-resolution display does. More real pixels mean less need for 'fake' pixels.

If NVIDIA would just give us a card with two chips glued to an interposer or something, we would have twice the performance and could turn off Smart Smoothing. I am still disappointed at how many years NVIDIA has held back on this performance milestone.

I never mentioned supersampling. All these tricks try to fake quality and reduce computing power.

But I agree: a dual-chip architecture may be the solution. A "2×3090" would perhaps allow 2 × 4K at 180 Hz, perhaps 2 × 6K at 144 Hz, but I doubt 2 × 8K at 144 Hz (without supersampling).
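A rough pixel-throughput estimate shows why 2 × 8K at 144 Hz is so much harder than the other two targets. This sketch assumes 16:9 panels (4K = 3840x2160, 8K = 7680x4320, and "6K" taken as 5760x3240, which is an assumption) and ignores supersampling, lens distortion and all other rendering overhead; `pixels_per_second` is a hypothetical helper.

```python
# Rough pixel-throughput comparison for the dual-display targets.
# Assumed 16:9 panel sizes; "6K" as 5760x3240 is an assumption.
# Supersampling and rendering overhead are ignored.

PANELS = {
    "4K": (3840, 2160),
    "6K": (5760, 3240),  # assumption: 16:9 "6K"
    "8K": (7680, 4320),
}

def pixels_per_second(panel, refresh_hz, displays=2):
    """Native pixels that must be produced per second across all displays."""
    width, height = PANELS[panel]
    return width * height * displays * refresh_hz

targets = [("4K", 180), ("6K", 144), ("8K", 144)]
baseline = pixels_per_second("4K", 180)

for panel, hz in targets:
    rate = pixels_per_second(panel, hz)
    print(f"2 x {panel} @ {hz} Hz: {rate / 1e9:.2f} Gpx/s "
          f"({rate / baseline:.2f}x the 2 x 4K @ 180 Hz case)")
```

With these numbers, 2 × 6K at 144 Hz needs about 1.8x the pixel rate of 2 × 4K at 180 Hz, and 2 × 8K at 144 Hz about 3.2x, which is consistent with doubting the last target even for a dual-chip card.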

They don't have any incentive to compete with themselves. Even if they were to feel a threat of competition, they would only leak just enough of the "downed alien ship's" tech to stay ahead.

Give us too much all at once and people would upgrade less often.

Exactly all of this. Instead of taking my money now (as a high-end GPU purchaser), they just want more certainty of taking everyone's money some day in the future. Constant cash flow. Growing a market. Regular subscription pricing. Planned obsolescence. That seems to be the thinking.

At least now I am working on software of my own that should be able to do some multi-GPU things…
