DLSS3 could be an absolute game changer for VR when applied to motion smoothing.

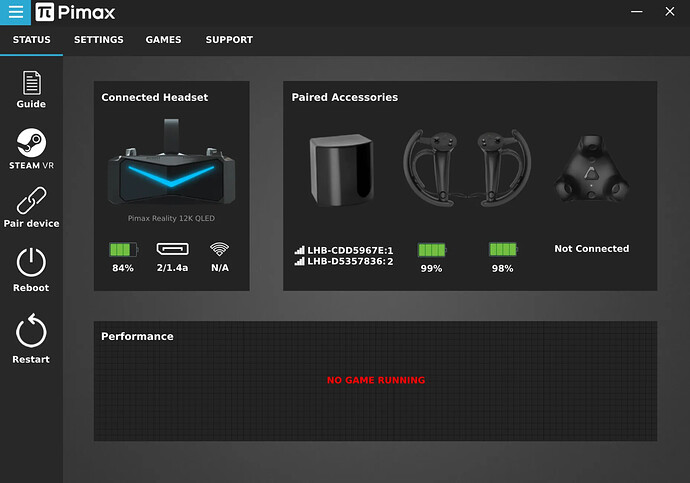

I must have gotten that one wrong then, I was convinced the 12K was announced with a single cable.

Two DP1.4 cables plus DSC plus foveated transport should suffice in terms of bandwidth.
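For anyone who wants to sanity-check that, here is a rough back-of-the-envelope sketch. The only hard number in it is the DP 1.4 HBR3 payload rate (32.4 Gbit/s raw, 25.92 Gbit/s after 8b/10b coding). The panel resolution, the 3:1 DSC ratio, the blanking overhead and the foveation saving are all illustrative assumptions, not Pimax specs.

```python
# Rough display-link budget. Everything except the HBR3 payload rate is an assumption.
DP14_PAYLOAD_GBPS = 25.92  # DP 1.4 HBR3: 32.4 Gbit/s raw, 8b/10b coding leaves 25.92 Gbit/s


def required_gbps(width, height, hz, bits_per_pixel=24, blanking_overhead=1.05):
    """Uncompressed video bitrate in Gbit/s, with a small assumed blanking overhead."""
    return width * height * hz * bits_per_pixel * blanking_overhead / 1e9


def link_budget_gbps(cables=2, dsc_ratio=3.0):
    """Pixel bandwidth of N DP 1.4 links, scaled by an assumed DSC compression ratio."""
    return cables * DP14_PAYLOAD_GBPS * dsc_ratio


def fits(width, height, hz, cables=2, dsc_ratio=3.0, foveation_saving=0.0):
    """Return (fits?, needed Gbit/s). foveation_saving is an assumed fraction of pixels dropped."""
    need = required_gbps(width, height, hz) * (1.0 - foveation_saving)
    return need <= link_budget_gbps(cables, dsc_ratio), need


if __name__ == "__main__":
    # Hypothetical headset: two 5760x3240 panels side by side at 120 Hz.
    ok, need = fits(11520, 3240, 120)
    print(f"need {need:.1f} Gbit/s, budget {link_budget_gbps():.1f} Gbit/s, fits: {ok}")
```

Under those assumptions the hypothetical panel needs about 113 Gbit/s uncompressed, which fits into two DSC-compressed DP 1.4 links but not into two uncompressed ones. Foveated transport would only add headroom on top of that.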

I want to see that tied to a 4090 NOW

I also want to meet Tobii. Sounds like a nice guy.

I mean, it literally shows both DP2.0 and 1.4

So I’d assume Pimax does have a bit riding on that IO…

BTW, where is that mockup from? Is it new?

It’s fake. It’s not official.

No, it’s over a month old. I think it was just an inadvertent leak from the translation community outreach, and I take it to mean 2x DP1.4a. Also, Kevin stated that although his sample did not have DP1.4x2 in May, Martin’s did. They clearly understand the importance of making full use of the bandwidth, as they are pushing 120Hz in the DMAS and inviting Tobii on board. Pimax certainly has a handle on the fact that bandwidth is limiting PCVR, so I’m not at all worried that they’re missing the point. Making it work is obviously their challenge, and I can see they are rising to it. The fact that they are working with WiGig also says they understand its importance. You couldn’t make 4K displays 10 years ago with two cables, so this is a somewhat natural evolution.

Did you find timmy?

The thing I’m wondering about is whether the 4090 will be compatible with the 8KX.

The 3090 Ti had a problem which was not encountered for other nVidia 30 series models where it wouldn’t interface with the 8KX over the displayport connection. My understanding is that the 3090 Ti is unique in that it uses some of the 40 series hardware… essentially a half way mixture of 30 series and 40 series. It’s not like the other 30 series cards, and this is likely why this problem occurred only for the 3090 Ti.

That suggests the possibility that the entire 40 series lineup will also have the same problem interfacing with the 8KX.

Has the interface problem between the 3090 Ti and the 8KX been resolved?

Will 40 series cards have the same problem?

Will the Crystal and 12K also be affected by this issue?

Last I heard, the only workaround was using the Lindy repeater.

Hope not, but I’ll be the first to complain if it doesn’t work.

This GPU generation sucks eggs. They want you to pay $900 for a 70-class GPU that sells itself as a 4080, and have a new DLSS that gives you fake frames via motion vectors (with onboard silicon), which will have latency penalties in any online application.

DLSS3 also will have sporadic support, just like DLSS2 has, because regular gamers and developers haven’t even made the jump to Ampere yet. Out of order shader execution is interesting, but this generation is a lemon in terms of value for money.

Nvidia deserves to lose money after getting fat off of Crypto profits.

I have to agree the price for the 4080 (or the disguised 4070) is very high, but people will pay for it because they don’t want to go without the new features. Ray tracing was the same way when it came out. People definitely thought it was a $300 feature. It wasn’t, of course. On the other hand, there is a much larger base for DLSS than there was for ray tracing. The list includes the top games PLUS Unity, UE4 and UE5 support! I do hope it turns out that it’s worth the price and that DLSS is the game changer that they advertise.

If ATI (which we cannot use) brings the new gen at reasonable prices, I am sure Nvidia will lose a lot of money due to the lack of interest from their customers. And if they hit the ground, it is their own fault. As for me, having bought Nvidia for about 22 years now, I may get one, but I have to be sure that it runs on my Pimax.

I am a VR-only user with a display emulator… almost all the time (without bug shooting).

These Nvidia GPU prices are out of control. I paid $1000 Australian for a brand new 980 Ti when it released. The chip shortage, miners and scalpers have affected the current asking prices. This is the perfect opportunity for AMD to make more margin, and hopefully they’ll offer GPUs that combine power efficiency with high performance at a reasonable price. We’ll know that soon enough. I’m guessing AMD will probably price theirs $50-$100 less. Hopefully the 40 series will eventually drop in price, like the 30 series did, before the next gen (which will be the 50 series) is announced.

Hope Pimax can work with AMD and Nvidia to iron out the compatibility issues. Most YouTubers are complaining that the 4080 12GB should be a 4070, and about the high asking prices. https://youtu.be/WrzgZaonmTc

So correct me if I’m wrong here, but isn’t DLSS 3 frame generation just bringing to pancake gaming what VR gamers have been using under the name space warp or Brain Warp, with the exception that it’s using AI to do it, which should arguably be better?

DLSS 3 seems like it was practically made for VR!

EDIT: I’ve just had more time to think about this.

So the real crazy part here is, you know how motion smoothing in some games shows artifacts? AI solves this problem! For DLSS3-supported games, could we just turn BrainWarp off and rely on DLSS to do the smoothing? Maybe it’s not that simple, because VR requires adding motion prediction into the equation, etc.

Still, no doubt VR mods like Luke Ross’s will benefit from the DLSS3 interpolation (e.g. Cyberpunk is getting it), so we might even be able to enable ray tracing in VR now.

I wonder if this is something that could be applied generically at the driver level to replace motion smoothing.

I was thinking the exact same thing when DLSS 3 was announced, but at least for now, VR support for DLSS 3 is not happening anywhere close to the launch of the new GPUs.

From what I gather, latency becomes an issue with DLSS 3 and is mitigated by Reflex, which has been around for a while and afaik doesn’t support VR.
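To put a rough number on that latency, here is a simplified model of frame interpolation, not a description of how DLSS 3 actually schedules frames. The assumption: an in-between frame can only be built once the next real frame exists, so that real frame is shown half a native frame time later than it otherwise would be, plus whatever the generation step costs (`generation_cost_ms` is a made-up parameter).

```python
def frame_time_ms(fps):
    """Time between two rendered frames in milliseconds."""
    return 1000.0 / fps


def added_latency_ms(native_fps, generation_cost_ms=0.0):
    """Extra display delay of the newest real frame under the simple model above.

    The generated frame takes the slot where the real frame would have been shown,
    and the real frame follows half a native frame time later.
    """
    return frame_time_ms(native_fps) / 2.0 + generation_cost_ms


if __name__ == "__main__":
    for fps in (30, 45, 60, 90):
        print(f"{fps} fps native -> about {added_latency_ms(fps):.1f} ms extra")
```

The takeaway under this model is that the penalty grows as the native frame rate drops, which is exactly the case where you would want frame generation most.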

This is true, latency will be a thing.

But I will try Cyberpunk; it may work with the 4090. This should be a very good test.

Which mod is better btw? Luke’s or vorpX? I do own both…

To get back to it, I think we will see more in a few days. Let’s hope the preorder will not be as tragic.

The way I see it, the 4090 is 75% better at pure rasterization than the 3090.

Assuming a few things:

1. DLSS3.0 doesn’t work with VR (we’ll see)

2. You’re not CPU-bound

3. Your game doesn’t use ray tracing

Pick ONE per VR game:

- 37.5 FPS -> 60 FPS

- 75 Hz -> 120 Hz

- 20 PPD -> 25 PPD

“That’ll be $1600 sir.”

None of the above things are going to be earth-shattering, but they are damn nice.
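The arithmetic behind those three options can be sketched like this. It assumes you are purely GPU-bound and that render cost scales linearly with frame rate and with pixel count, which is a simplification. Since pixel count grows with the square of PPD at a fixed FOV, resolution only scales with the square root of the speedup. The 1.75 factor is the figure quoted above; the function names are just for illustration.

```python
import math

SPEEDUP = 1.75  # the "75% better at pure rasterization" figure from the post


def scaled_rate(rate, speedup=SPEEDUP):
    """Frame rate (FPS or Hz) after the speedup, assuming a purely GPU-bound game."""
    return rate * speedup


def scaled_ppd(ppd, speedup=SPEEDUP):
    """PPD after the speedup: pixel count grows with PPD squared at a fixed FOV."""
    return ppd * math.sqrt(speedup)


def implied_speedup_ppd(ppd_before, ppd_after):
    """Speedup needed to go from one PPD to another at the same frame rate."""
    return (ppd_after / ppd_before) ** 2


if __name__ == "__main__":
    print(f"37.5 FPS -> {scaled_rate(37.5):.1f} FPS")
    print(f"75 Hz    -> {scaled_rate(75):.1f} Hz")
    print(f"20 PPD   -> {scaled_ppd(20):.1f} PPD")
```

By this estimate the three targets in the list need roughly a 1.6x speedup each, so they leave a bit of headroom if the 75% figure holds.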

Do you play a lot of 2D games as well?

Do you play demanding VR games like MSFS, DCS?

Do you have a good CPU?

Do you have an 8KX to pair with it?

Do you have $1600 lying around?

Are you planning on getting a 12K?

I’d love to hear any other reasons you can think of to get or not get a 4090, so feel free to reply with your thoughts!

Personally I checked every last one of those boxes, so I can always let you know how it goes later this month.

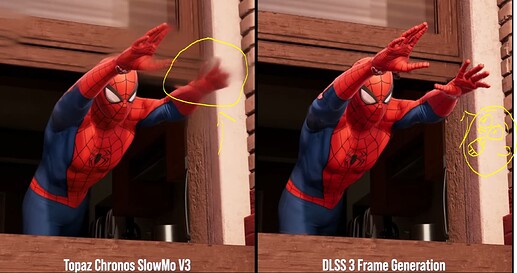

Just because DLSS3 uses AI doesn’t mean it will look arguably better than Asynchronous Spacewarp; quite the opposite, actually, for reasons inherent in the difference between VR and monitor gaming.

As an avid Digital Foundry fan, I take what DF says with a hefty grain of salt. The Topaz vs DLSS3 image there is slightly disingenuous. If you pixel peep DF’s own footage of DLSS3 in action there are several times where you see identical artifacts to what we see in this image in Topaz.

As usual, the artifacts will present most strongly where there is occlusion in the camera’s view. There is one where Spider-Man is passing a brick wall while he is behind a railing. Part of the railing as well as a huge chunk of the wall texture is just gone in all of the synthetic frames that are generated, because the data is just not there. Same goes for the UI.
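Here is a toy 1D illustration of why occlusion is the hard case. It is a sketch of the general disocclusion problem, not of how DLSS 3 works: it warps only the previous frame, while a real interpolator can also look at the next frame and fill some of these holes from there. The scene layout and function names are invented for the example.

```python
def render(width, fg_start, fg_len):
    """One scanline: background pixels are 'b0', 'b1', ...; the foreground object is 'F'."""
    frame = [f"b{i}" for i in range(width)]
    for i in range(fg_start, fg_start + fg_len):
        frame[i] = "F"
    return frame


def warp_previous(prev, fg_start, fg_len, shift):
    """Build a new frame from the previous one by moving the foreground by `shift` pixels.

    Background that was visible stays put. Background that was hidden behind the
    foreground in `prev` is unknown, so those pixels stay None (holes).
    """
    width = len(prev)
    out = [None] * width
    for i, px in enumerate(prev):
        if px != "F":
            out[i] = px  # visible static background carries over
    for i in range(fg_start, fg_start + fg_len):
        j = i + shift
        if 0 <= j < width:
            out[j] = "F"  # foreground drawn on top at its new position
    return out


def holes(frame):
    """Indices where the warped frame has no data at all."""
    return [i for i, px in enumerate(frame) if px is None]


if __name__ == "__main__":
    prev = render(10, fg_start=2, fg_len=3)
    guess = warp_previous(prev, fg_start=2, fg_len=3, shift=2)
    print(guess)
    print("missing background at:", holes(guess))
```

The pixels the object just uncovered have no source in the previous frame, so something has to invent them. That is where a missing railing or a smeared wall texture comes from.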

ASW in VR, by contrast, is inherently better because there is simply twice the data (stereo vision), IMU data separate from the cameras, engine integration, and your head always does these unconscious micro movements. At 90-120 fps that physical phenomenon serves as a kind of passive antialiasing, and also feeds the ASW algorithms more data with less occlusion than you could ever get from a simple 2D monitor, because there is always a little jitter in the camera from head movement.

Also, VR is more amenable to artificial frame creation because, with that much FOV being covered, the artifacts are easier to hide thanks to the natural flaws in our eyes. Also, in the VR pipeline, the HMD is always aware of what the game engine is doing. SteamVR, Pimax, Oculus all have hooks for VR in the engine. That is crucial for artifact-free motion interpolation.

If you have seen Intel’s XeSS, they micro-jitter the game camera to feed their algorithm more data for the upscaling.
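For reference, this is roughly what camera micro-jitter looks like in code. Temporal anti-aliasing and upscaling setups commonly draw sub-pixel offsets from a Halton sequence; whether XeSS uses exactly this sequence is an assumption on my part, so treat it as a generic sketch.

```python
def halton(index, base):
    """Radical-inverse (Halton) value in [0, 1) for a 1-based index and a prime base."""
    result, fraction = 0.0, 1.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def jitter_offsets(count):
    """Sub-pixel camera offsets in [-0.5, 0.5) pixels, one (x, y) pair per frame."""
    return [(halton(i, 2) - 0.5, halton(i, 3) - 0.5) for i in range(1, count + 1)]


if __name__ == "__main__":
    for frame, (dx, dy) in enumerate(jitter_offsets(8), start=1):
        print(f"frame {frame}: shift camera by ({dx:+.3f}, {dy:+.3f}) px")
```

Each frame samples the scene at a slightly different sub-pixel position, so over several frames the algorithm sees more detail than a single frame contains.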

VR just has that happen as a natural consequence of our movement. What I really don’t like about Nvidia’s approach is that their optical flow generator on the Lovelace silicon doesn’t involve the game engine when it’s generating its frames.

That is just a bad idea. Nvidia wants the perception of avoiding the CPU bottleneck that an engine would impose, and to give you the visual of that huge number of frames, but the game engine would be able to feed the optical flow generator the necessary occluded data to avoid those artifacts. It wouldn’t look as impressive in benchmarks but would actually make it better in reality.

I.e. they are doing it the lazy way so they can wow with big numbers. The optical flow generator is the interpolation chip in your TV, but on steroids.

Combine that with the added latency and the reliance on Reflex to make it work, and they are just charging you more money for something that will be subpar and feeding you useless frames.

VR already has better artificial frame rate amplification than DLSS3 by default because it includes the game engine.

If I had DLSS3 on a game like GTA V, and the optical flow generator on Lovelace gave me 240 frames, I wouldn’t see any benefit from it. That’s because GTA V’s engine natively tops out and starts acting weird anywhere past 120Hz. Since the engine isn’t involved, I would get soap-opera-effect-like artifacts, higher latency, and the game would really only look slightly more temporally stable.