“We believe Intel has zero-to-no chance of catching/ surpassing TSM (AMD partner) … at least for the next half decade, if not … ever,”

Surely if any company could survive and come back from something like that, it would be Intel?

Yeah, I wouldn’t count Intel permanently out. They are huge and sitting on a big mound of money. Maybe they’re just out for this round.

“Intel said Thursday that its latest manufacturing problems will only delay the 7nm chips by about six months, but the company also said the chips won’t be on sale until late 2022 or early 2023 – at least a year behind schedule.”

For the AMD RX 5700 XT, there isn’t much of a difference in benchmarks.

For disk IO, which people here care less about, the story looks different.

That’s a 25% improvement already, from one of the fastest PCIe 3.0 SSDs to one of the first PCIe 4.0 SSDs.

That’s the trick. They stated that the 3070 is more powerful than the 2080 Ti in raytracing benchmarks. It’s a very specifically selected comparison.

Many games we play don’t use raytracing. Do you believe IL-2 or DCS would run faster on a 3070?

From EVGA regarding the Step-Up program:

"Current cutoff for products purchased is Wednesday, June 3, 2020 in order to submit a request today. Products purchased on or after this date may be eligible for the Step-Up program.

EVGA Step-Up is currently only available to residents of EU and EEA Countries, Switzerland, the United States (not including outlying territories), and Canada".

Source: http://eu.evga.com/support/stepup/

As the UK has left the EU, presumably the Step-Up program no longer applies?

Unfortunately, I don’t think that’s possible. What would work is if NVIDIA paid developers to add SLI support.

A very good point! I hope it’s just an oversight and they don’t mean to exclude UK. Something to check up on before Sep 17th.

https://forums.evga.com/Stepup-Post-Brexit-m2851390.aspx sounds promising, but I’ll probably email for confirmation

My understanding is that the CUDA cores aren’t “exactly” doubled, but that their 32-bit floating point speed is doubled. That is, each CUDA core can process either 1 64-bit or 2 32-bit operations at a time. Assuming that info is correct, then the rasterized performance should indeed be doubled.

So yes, marketing is double-counting the CUDA cores (as “2x FP32”), but performance-wise, it’s equivalent.
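To make the double-counting point concrete: a theoretical peak FP32 figure is usually computed as shader units × FLOPs per unit per clock × clock speed, with one fused multiply-add counted as 2 FLOPs. The sketch below assumes a 1.71 GHz boost clock for the RTX 3080 and shows that describing the same hardware as 8704 cores doing one FMA per clock, or as 4352 units doing two, gives an identical peak number. It is a paper figure only and says nothing about real game performance.

```python
def peak_fp32_tflops(units: int, flops_per_unit_per_clock: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS."""
    # units * FLOPs per unit per clock * clock in GHz gives GFLOPS; divide by 1000 for TFLOPS
    return units * flops_per_unit_per_clock * boost_clock_ghz / 1000.0


BOOST_CLOCK_GHZ = 1.71  # assumption: RTX 3080 reference boost clock

# Marketing count: 8704 "CUDA cores", each doing one FMA (2 FLOPs) per clock.
as_marketed = peak_fp32_tflops(8704, 2, BOOST_CLOCK_GHZ)
# Same hardware described as 4352 units that each do two FMAs (4 FLOPs) per clock.
as_dual_issue = peak_fp32_tflops(4352, 4, BOOST_CLOCK_GHZ)

print(f"{as_marketed:.1f} TFLOPS vs {as_dual_issue:.1f} TFLOPS")
```

Either way of counting lands on the same ~29.8 TFLOPS, which is why the core count doubles on paper while the underlying throughput claim stays the same.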

Source: NVIDIA Announces the GeForce RTX 30 Series: Ampere For Gaming, Starting With RTX 3080 & RTX 3090

As for Intel, their strategy seems to be getting out of the hardware business, and going for the cloud/software business. They could make themselves as much of a sore point in the next few years as Microsoft.

But FP32 shading isn’t everything, is it? According to the Wikipedia page, the texture fill rate is almost the same for the RTX 3090 as it was for the RTX 2080 Ti. And it’s pretty routine to reduce shading quality settings if they are slowing things down in VR. In fact, with DCS World, where it counts most, we are not really going for eye candy at all, not even decent lighting; what we need is more polygons and more pixels.

I think we need a Pimax DCS World VR benchmark. Since I plan on upgrading anyway, I might as well publish my findings.

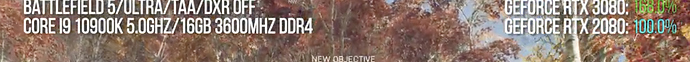

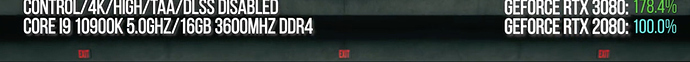

Decent results, assuming raytracing was off. Scaling the ~180% performance from the 3080 vs 2080 to 3090 vs 2080 Ti based on shader units, and again assuming the 3090 and 2080 Ti achieve similar overclock/stock ratios…

(4352/2944) × (10496/8704) ≈ 1.78

So, about 80% better. Within the ballpark, pretty good convergence with similar calculations based on other things like transistor count.
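The product of shader-count ratios in that estimate can be reproduced in a few lines of Python, as a check on the arithmetic only. The card-to-count mapping (2944 for the RTX 2080, 4352 for the 2080 Ti, 8704 for the 3080, 10496 for the 3090) is the one implied by the numbers in the post; the helper name `ratio` is just for illustration.

```python
# Shader (CUDA core) counts used in the estimate.
SHADERS = {
    "RTX 2080": 2944,
    "RTX 2080 Ti": 4352,
    "RTX 3080": 8704,
    "RTX 3090": 10496,
}


def ratio(a: str, b: str) -> float:
    """Ratio of shader counts between two cards (hypothetical helper)."""
    return SHADERS[a] / SHADERS[b]


# Same product as in the post: (2080 Ti / 2080) * (3090 / 3080)
estimate = ratio("RTX 2080 Ti", "RTX 2080") * ratio("RTX 3090", "RTX 3080")
print(f"{estimate:.2f}")
```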

But not the >>2x we would be seeing if those CUDA cores really were worth twice their number as NVIDIA claims. And that is before we get into VR resolutions/framerates/supersampling.

I’ll take 80%…

Never mind, apparently raytracing was indeed enabled. So lower those expectations even further…

Just wait for real reviews everyone. This whole fapping over raytracing is getting really old. DLSS is also something that can skew perceptions, especially in comparisons vs the 10 series.

I just saw the first prices for the 3rd party cards. Asus Strix 3090 is 1800 Euros converted from UK Pounds. So the Founders Edition with a pretty complicated and expensive cooler plus a waterblock is still cheaper than an AIB aircooled card? What are these people smoking?

AIB cards are always more expensive than the Founders Edition, because they are pre-binned.

By AIB do you mean MSI, EVGA, Gigabyte cards, etc.? Are they significantly better than Founders Edition cards in terms of heat and performance?

To give a brief explanation of silicon binning: silicon comes as an ingot, shaped like a log or sausage, which is sliced into wafers.

The highest quality silicon is in the middle of those wafers. The binning process is automated via electrical testing. Manufacturers can do different things here:

AMD used to make 3-core processors. Those were literally poor-quality quad cores with a portion of the silicon that didn’t work. Instead of throwing them away as failures, AMD sold them as 3-core CPUs.

Cards that can operate in stability at higher voltages are often put in an ‘overclock’ bin.

I believe that, at least with the 900 series, the 980, 980 Ti and the Titan were all the same silicon, just with varying quality or with different portions working.
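A toy sketch of the binning logic described above, assuming each die has already been through automated electrical testing that reports how many cores work and the highest stable clock. The `TestedDie` type, the `assign_bin` function, the bin names and the 2000 MHz threshold are all hypothetical; real binning criteria are far more involved.

```python
from dataclasses import dataclass


@dataclass
class TestedDie:
    working_cores: int   # cores that passed the electrical test
    max_stable_mhz: int  # highest clock that passed the stability test


def assign_bin(die: TestedDie, full_cores: int = 4, oc_threshold_mhz: int = 2000) -> str:
    """Sort a tested die into a sales bin (hypothetical thresholds)."""
    if die.working_cores == full_cores and die.max_stable_mhz >= oc_threshold_mhz:
        return "full, factory OC"
    if die.working_cores == full_cores:
        return "full, stock clocks"
    if die.working_cores == full_cores - 1:
        # One core failed: disable it and sell a cut-down part, like the 3-core CPUs
        return "cut-down"
    return "scrap"


dies = [TestedDie(4, 2100), TestedDie(4, 1900), TestedDie(3, 2050), TestedDie(2, 2000)]
print([assign_bin(d) for d in dies])
```

The point of the sketch is that a die with a failed section is not necessarily thrown away; it can be sold as a lower part, while the best-clocking full dies go into the overclock bin.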

When you buy an AIB GPU, you aren’t actually guaranteed better OC performance. You are guaranteed an extremely high quality slab of silicon, and that probably means you can OC more. I think this is less important than some would say. I have a Founders Edition 1080 that easily OCs +120MHz on the core and +500MHz on the memory. MAYBE I could get a 1080 that can do +200 if it was pre-binned, but I can say from experience many pre-binned cards OC like garbage.

EDIT: I’m just hoping a manufacturer out there has some design sense; the current offerings are absolutely hideous. Plastic RGB puke, yuck.

Actually, vendors do test their chips for overclockability, set far higher default clocks than even Founders Edition cards (~1950MHz vs ~1500MHz), and presumably ‘bin’ the worse chips by throwing them on the cards with stock default clocks.

Even that high default overclock is lower than what the chips can achieve (~1950MHz vs ~2100MHz), presumably to account for computational error rates that could be very damaging to customers, such as a few random bits flipping in a few tens of terabytes of complex data.

Thus, vendors do guarantee a specified overclock, and are not relying on which wafers went into the chip fab. Quality of the silicon would only be one part of the story anyway. AFAIK, the optical depth of field for modern photolithography is less than a fraction of a silicon atom now, so inevitable imperfections in the optical focus, surface polishing, etc. make chips differ from each other (though neighboring transistors on the same chip do not differ from each other, which becomes very important in analog electronics).

Bottom line: when you buy a 1950MHz factory OC card, you should get 1950MHz, far beyond what NVIDIA sells, without even installing ‘overclocking’ software. From there, if you overclock further, you should get something like 2100MHz, without voiding the warranty.
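Using the approximate clocks quoted in this post (~1500MHz Founders Edition, ~1950MHz factory OC, ~2100MHz manual OC), the relative gains work out as below. This is clock arithmetic only; frame rates do not scale one-to-one with core clock.

```python
def pct_gain(new_mhz: float, base_mhz: float) -> float:
    """Percentage increase of new_mhz over base_mhz."""
    return (new_mhz / base_mhz - 1.0) * 100.0


# Approximate clocks quoted in the post (MHz)
FE_CLOCK = 1500
FACTORY_OC_CLOCK = 1950
MANUAL_OC_CLOCK = 2100

print(f"Factory OC vs FE:        {pct_gain(FACTORY_OC_CLOCK, FE_CLOCK):.1f}%")
print(f"Manual OC vs factory OC: {pct_gain(MANUAL_OC_CLOCK, FACTORY_OC_CLOCK):.1f}%")
print(f"Manual OC vs FE:         {pct_gain(MANUAL_OC_CLOCK, FE_CLOCK):.1f}%")
```

On these numbers, the factory overclock already covers about 30 of the roughly 40 percentage points of headroom, and a further manual overclock adds under 8% on top.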

Can this be done with the chips vendors and NVIDIA sell at lower default clocks? Maybe. That’s more of a gamble.

Calm down… I would wait until real benchmarks land from Gamers Nexus, JayzTwoCents, etc. before I make any decisions.

I’m not sure I quite believe yet that a 3070 is more powerful than a 2080 Ti at anything other than raytracing.

It would be even better to see if @SweViver or @mixedrealityTV can do VR specific benchmarks