Wow, it sounds like it’s going to be more expensive than I was expecting. $1500 for the 16 GB version. Ouch!

Pretty crazy, I know, but that's the price you pay for Ferrari performance, I guess!

Not having any high-end GPU competition really stinks.

Yes, exactly. And a proprietary connector, priced proprietarily.

Where did you get that price information? Nothing in the article says anything about price.

The linked article links to Tom's Hardware, which has that price and lists TweakTown as the source.

Actually, it gives a "rumors" price range of $999 to $1499… so it's speculation.

It's all rumors at this point. But those price points make sense (unfortunately). Nvidia doesn't really have any competition at the high end right now.

All rumors, just like the shipping date of the M1… rumors curse and follow us in this forum.

Nvidia has a backstock of more than a million 10-series GPUs that need to be cleared. The only way I can see the 11 series coming out is if it sits above the price bracket of the 10 series. For example, if they release an 1180 that beats a 1080 Ti by 30 percent for 30 percent more cash, that's not going to undercut the Ti much. But if they release an 1170 that's equal to a 1080 Ti, even at the same price it's going to be a real issue to move older stock. Maybe they will release the 1180 and hold back the 1170 until it makes monetary sense for them to do so.
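The 1180 vs 1080 Ti argument is really just price/performance arithmetic. A minimal sketch, assuming a baseline price of $699 for the 1080 Ti and reusing the post's hypothetical 30% figures (the 11-series cards and their prices are rumors, so none of these are announced numbers):

```python
# Hypothetical numbers only: the 1080 Ti baseline price is an assumption and the
# 11-series cards are rumored, so the 30% figures just mirror the post above.

def perf_per_dollar(relative_perf: float, price: float) -> float:
    """Relative performance (1080 Ti = 1.0) per dollar spent."""
    return relative_perf / price

TI_PRICE = 699.0  # assumed baseline price in USD

cards = {
    "1080 Ti": (1.0, TI_PRICE),
    "1180 (+30% perf, +30% price)": (1.3, TI_PRICE * 1.3),
    "1170 (= Ti perf, same price)": (1.0, TI_PRICE),
}

for name, (perf, price) in cards.items():
    print(f"{name:30s} ${price:7.2f}  {1000 * perf_per_dollar(perf, price):.3f} perf per $1000")
```

All three come out at the same value per dollar. An 1180 priced in step with its performance leaves the Ti's value untouched, while an 1170 offering the same value as a newer card would compete head-on for the same buyers as the old stock.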

I think I will be keeping my 1080 Ti (just upgraded from a 1070) for a while.

But I'm definitely putting water cooling on it!!!

Good, but only if this proprietary connector is either:

A) Used by both AMD and Nvidia, or

B) Adaptable to current headsets with DP 1.4, or paired with an adapter back to DP 1.4 at the headset end.

Otherwise they're trying to scam everyone into buying new headsets, with no choice in GPU. Nvidia is not known for supporting open standards, e.g. FreeSync.

And of course, if wireless works out, the special connector becomes moot.

Hmm, a higher-bandwidth connector? We need faster clocks, more shading units and more GFLOPS to push higher resolutions at acceptable frame rates. What good is 4K at 120 Hz if we can't maintain the needed frame rate…?

We really need more ROPs (for higher resolutions), and we definitely need higher clocks.

None of that is going to do the industry any favors when these GPUs are so damn expensive.

Yes, but unfortunately even that would not be enough anymore, as current silicon tech is already at its physical limits or very close to them. Just look at how the compute units have doubled over the last few GPU generations, but still… performance gained only a rough 30-35%. Even increasing bandwidth is not very useful, because it is already well beyond the data the chips can actually move (a simple look at GPU-Z will show it never goes over 50%, even in the most intensive tasks like image processing).

If Nvidia or AMD really want to push for real innovation, they need to use the recently declassified military-grade tech, or at least really innovative memory like magnetoresistive or memristor-based memory (HP tried the latter some years ago with their "The Machine", only to be forced to "stand down" and change plans…), or even better… in fact, optical chips with optical bus interconnects have been around in some closed circles for almost 20 years now.

The tech is already there, my friends… but the problem is that almost no one knows about it, and no one asks for it… so the companies keep capitalizing on useless drop-by-drop "innovations" (junk).

Educate yourself, share, and as soon as there are thousands of us asking for it… IT WILL HAPPEN IN NO TIME:

Without the bandwidth to drive dual panels, it would not matter how much brute force the card has; the bottleneck would be the cable?

The R2VR article states:

> GTX 11-series will include a new connector with enough bandwidth to support future VR headsets' much higher resolutions and greater refresh rates.
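The "bottleneck would be the cable" question can be sanity-checked with back-of-the-envelope arithmetic. A rough sketch, assuming a DisplayPort 1.4 HBR3 link (4 lanes × 8.1 Gbit/s with 8b/10b encoding, about 25.92 Gbit/s of payload) and 8-bit RGB (24 bits per pixel), counting active pixels only (no blanking intervals, no Display Stream Compression), so real requirements are somewhat higher. The dual-panel 2160×2160 headset is purely hypothetical.

```python
# Back-of-the-envelope display bandwidth check.
# Assumptions: DP 1.4 HBR3 link, 8-bit RGB, active pixels only (blanking and
# DSC compression are ignored), so these are lower bounds on what a link needs.

DP14_LANES = 4
DP14_GBPS_PER_LANE = 8.1            # HBR3 raw rate per lane
DP14_CODING_EFFICIENCY = 8 / 10     # 8b/10b line coding overhead

def dp14_payload_gbps() -> float:
    """Usable DisplayPort 1.4 payload in Gbit/s (about 25.92)."""
    return DP14_LANES * DP14_GBPS_PER_LANE * DP14_CODING_EFFICIENCY

def video_gbps(width: int, height: int, hz: float,
               bits_per_pixel: int = 24, panels: int = 1) -> float:
    """Raw pixel data rate in Gbit/s for `panels` identical displays."""
    return width * height * hz * bits_per_pixel * panels / 1e9

link = dp14_payload_gbps()
modes = {
    "4K monitor @ 120 Hz": video_gbps(3840, 2160, 120),
    "hypothetical 2x 2160x2160 HMD @ 120 Hz": video_gbps(2160, 2160, 120, panels=2),
}
for name, need in modes.items():
    verdict = "fits" if need <= link else "exceeds"
    print(f"{name}: {need:.2f} Gbit/s ({verdict} DP 1.4's {link:.2f} Gbit/s)")
```

Once blanking is added back, 4K at 120 Hz already sits close to the DP 1.4 limit, and a dual high-resolution panel setup goes past it without compression. That is the point being made here: extra GPU horsepower alone does not help if the link cannot carry the frames.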

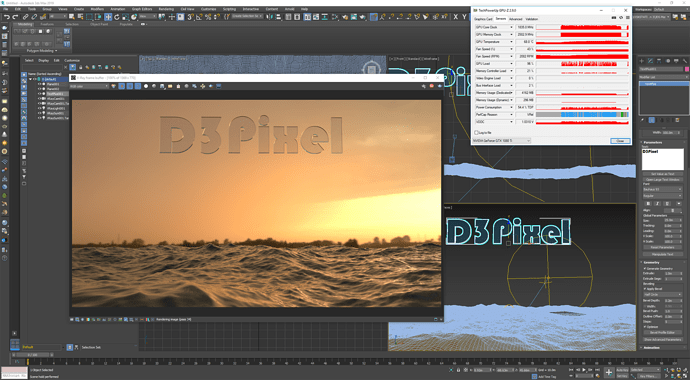

Not true. I render daily on GPUs and they can certainly max out those CUDA cores. In fact, I will just double-check…

Below is the start of a GPU render on a 1080 Ti, and as you can see, at this snapshot in time it was at 96% load. It stays between 90 and 99% for the duration of the render.

If it is not maxing out your GPU, then the software is not designed to. We have this discussion in render engine forums quite a lot, as people add up to 8x GPUs and expect a 100% increase in render performance for each card added. But most software is not optimized to max out a GPU if you only have one, as it would grind Windows usability to a halt. The mouse would not move, etc.

The above render was done on a different GPU from the one I use for the system. If I enable the system card too, I can hardly use the machine while it renders, so maybe this is what you have seen.
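What those render-engine discussions are really measuring is multi-GPU scaling efficiency: the speedup over a single card divided by the number of cards, where 100% would mean every added card fully pays for itself. A minimal sketch; the render times in the example are made-up illustrative numbers, not benchmarks.

```python
def speedup(t_single: float, t_multi: float) -> float:
    """How many times faster the multi-GPU render finished."""
    return t_single / t_multi

def scaling_efficiency(t_single: float, t_multi: float, gpus: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 = perfect)."""
    if gpus < 1:
        raise ValueError("gpus must be >= 1")
    return speedup(t_single, t_multi) / gpus

# Illustrative (made-up) render times in seconds.
t1 = 120.0   # one GPU
t4 = 40.0    # four GPUs
print(f"speedup: {speedup(t1, t4):.2f}x, efficiency: {scaling_efficiency(t1, t4, 4):.0%}")
```

In this made-up case four cards give a 3x speedup, i.e. 75% efficiency rather than the hoped-for 100% per card.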

That one is the GPU occupancy (CUDA cores), not the memory bandwidth we were talking about (Memory Controller Load), which, as you can see, stays at roughly 21% during the rendering process. And in any case, no computer game uses as many resources as a 3D renderer or image processor.

Ahh ok, I missed what you meant.

Even so, what does memory controller load or memory bandwidth have to do with the bandwidth bottleneck between GPU → display?

http://www.tomshardware.co.uk/answers/id-2835229/memory-controller-load-gpu.html

Also, plenty of real-time games can max out the GPU load (it does not have to be pure CUDA). That's why games have frame limiters these days, to avoid unnecessarily running flat out or above your display's refresh rate.
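A frame limiter boils down to giving each frame a time budget of 1 / refresh rate and idling for whatever is left instead of starting the next frame early. A minimal Python sketch of that idea, assuming a hypothetical `render_frame` callback standing in for a game's per-frame work; real engines typically combine this with V-Sync or driver-level caps and use finer-grained timing than `time.sleep` offers.

```python
import time
from typing import Callable

def run_frame_limited(render_frame: Callable[[], None],
                      target_hz: float, frames: int) -> float:
    """Run `frames` iterations of render_frame(), sleeping so the loop never
    goes faster than target_hz. Returns the measured average FPS."""
    frame_budget = 1.0 / target_hz          # seconds allowed per frame
    start = time.perf_counter()
    deadline = start
    for _ in range(frames):
        render_frame()                      # hypothetical per-frame work
        deadline += frame_budget            # earliest time the next frame may start
        remaining = deadline - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)           # idle instead of running flat out
    return frames / (time.perf_counter() - start)

if __name__ == "__main__":
    fps = run_frame_limited(lambda: None, target_hz=60, frames=30)
    print(f"average: {fps:.1f} FPS (capped at 60)")
```

Even with a frame that takes no time at all, the loop settles at roughly the target rate instead of spinning the hardware at 100%.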

Admittedly, there are a lot of technical aspects of the GPU and its various bandwidths that I do not understand.

In short, it makes no difference if they add more memory bandwidth, faster data buses, or double the CUDA cores; you're going to gain only a small performance boost, because the current bottlenecks of silicon chip technology (not only GPUs) intrinsically slow down the whole system. Electrical signals and heat dissipation have limits that must be respected to avoid errors in the computations.

Soon this will become evident, as VR is pushing the limits far beyond what silicon chips can do, and there are already viable alternatives.