So currently I am still getting bad performance with a Vega 64, while 1080/1070 users report better performance than mine.

Also, the major offenders are 72 Hz and 64 Hz still not working, plus Smart Smoothing producing a lot of artifacts in both 90 Hz and 120 Hz modes.

Do I have to buy an Nvidia GPU if I want to play with this headset?

We do have a lot of AMD in the lab - here’s a photo of another test card we added.

Thing is, there are driver issues on AMD at the moment that negatively affect the experience. SteamVR recently patched a lot of them (you can read through the SteamVR patch notes to see some of it), so hopefully we can build on that (and the improvements AMD is making to the driver itself) and make more progress with AMD cards.

That said if you are looking for the most “consistent” VR experience right now then Nvidia is indeed the way to go.

Indeed, Pimax needs to figure out why AMD is not having issues with other VR headsets. This has been a known issue with Vega since the P4K, where Pimax had to make a special firmware.

It is in AMD’s interest to help Pimax resolve this, especially with the 8KX release.

On the plus side, the new CryEngine demo proves ray tracing doesn’t need special cores.

Check the YouTube video and comments. One fellow, Darthtux, explains ray tracing going as far back as the Amiga.

Well, actually I had a performance regression with the new SteamVR / new driver / new OS / new PiTool.

SteamVR itself now suggests 30% in the video settings instead of the previous 32%…

I understand that your company is small and has to focus on the majority of the market, but as you did for backers, you could set a “stretch goal” and give AMD a little bit of love.

For example, I’d be glad to at least have proper Smart Smoothing support without tearing, so I could at least trade a bit of input lag for smoothness and have a good experience.

Currently the headset is mostly sitting on the shelf after a quick “open the game, check fpsVR, close”, while I am constantly lurking on the forums.

I wouldn’t have bought the headset if you had specifically stated “AMD cards have worse performance and support than their Nvidia equivalent” instead of writing “Nvidia 1070 and AMD equivalent or above” in the requirements.

Just read the SteamVR patch notes over the past couple of months… A lot of the problems they are correcting are rather fundamental.

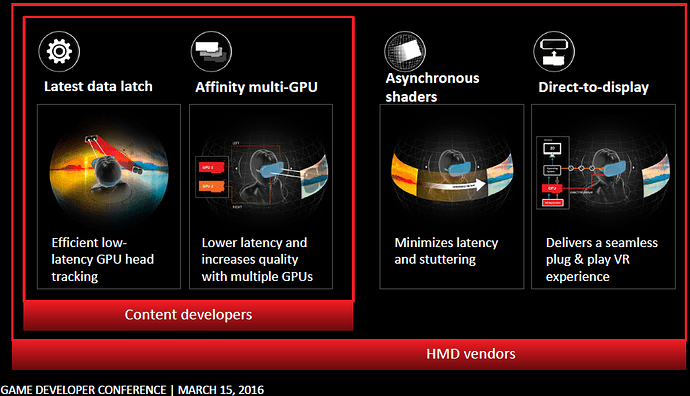

That’s only one end of the chain. With or without fixes from AMD, there is more performance degradation than with other headsets on SteamVR or Oculus. Just pointing the finger at AMD is not enough. Pimax has to collaborate with AMD to implement LiquidVR and optimize for GCN and RDNA as well.

All the SteamVR AMD fixes were for Motion Smoothing that made it into SteamVR in May 2019. I think the key is to have it really used properly in PiTool for Vega, Navi and Polaris. Another thing that might cause degradation is async compute not working properly, as happened in the past with the SteamVR compositor.

If Pimax knows the root cause of the insufficient performance, they should tell AMD but also put it in the PiTool release notes.

Of course, Steam has had to correct some of their issues with AMD and Pimax as well. The real curiosity is that older cards fare much better, as was also true in the P4K days, suggesting that AMD, like Nvidia, made some major changes that affect Pimax’s interaction with the graphics driver. From our own conversations, it is disappointing that the two sides have not resolved this issue after more than a year.

Is there really a noticeable degradation in performance on Oculus, SteamVR or Windows MR headsets related to AMD GPUs? I know that AMD GPUs have a lower raw performance in general, but I have not read or heard about specific problems related to the hardware.

The impression is that the performance delta relative to Nvidia cards is bigger on Pimax than the delta seen with other SteamVR and Oculus headsets. But this is not apples to apples anyway, because Pimax has a very different resolution, etc.

The point rather is that @PimaxVR should not sit still waiting for AMD and SteamVR to sort out their stuff and assume that will be sufficient.

Pimax has to go the extra mile as well to improve performance with AMD drivers.

The experience atm is that when something does not perform well enough, the system just keeps rendering without letting us know that a capability such as Smart Smoothing, or another feature like Direct-to-Display, is going unused… So the user doesn’t know whether to point at the game’s developer or at Pimax to improve or fix things…

It’s not about whether AMD users are left out in the cold vs Nvidia users. It’s about putting effort into getting the best possible result with all the feasible GPUs, and the same goes for Intel Xe in future IMHO.

Truthfully, this is old: it goes back to the P4K (1440p upscaled to 4K, 60 Hz) and the old B1 (1440p OLED, 60 Hz).

So it is more related to Pimax than to high resolution. But agreed, these two need to find a resolution; going by the above, this issue is actually just over 2 years old or more, first reported with the Vega release.

I am not talking about Pimax. I am asking if, when running the same Oculus game (on Oculus) using an AMD GPU, there is a noticeable performance difference, compared to when I run the same game on an Nvidia GPU, which would otherwise be considered equivalent in raw power to the AMD GPU?

I’m only talking about performance related to Pimax. That’s what this thread is about: PiTool vs Oculus or Steam/HTC Vive. AMD users, me included, got the impression that the speed they get on Pimax doesn’t compare as well as the relative result they got with other HMD runtimes. The reference is experience with the Pi4K or other HMDs.

As noted in the SteamVR release notes, Motion Smoothing gets disabled automatically, until the next SteamVR restart, when a frame takes too long. Hiccups in the pipe with AMD drivers and GCN async compute could easily cause such things, I assume. That is a documented issue; we can expect that SteamVR operates the same way on undocumented issues.

But then PimaxUSA should not push ppl to buy just Nvidia for a smooth experience as he did here; it should be on PimaxVR to fix peculiarities for all GPUs and drivers, not just the ones with the biggest market share…

For Oculus, I think they did it in an acceptable way. The Nvidia optimizations usually came 1-2 months earlier in beta, but the AMD user base was not left behind for months…

Both are relative, as there was also an attempt to use the idea that other non-Pimax headsets have issues, which is not really the case, as shown in benchmarks.

The HP Reverb, for example, has a resolution close to that of our currently released headsets.

But as we know, Vega cards have had issues with Pimax since release, starting with the P4K and B1, which required a special firmware and an older Crimson driver. So this Pimax-specific issue is not new, and Pimax may need to push a new firmware for P4K customers if it can’t be fixed in the driver alone. Especially since during 2018 they were clearing these headsets to new customers at the time.

I heard the GTX 3000 series is coming this summer. Can’t wait!!

Well, actually my V64 is on paper a much more powerful GPU than a 1080, and this shows in low-level APIs such as DX12/Vulkan… On dinosaur APIs such as DX11 it sometimes loses to the 1080, but in 2020 the drivers are so mature that they are basically the same, if not better (Vega).

I had no performance issues on my Oculus Rift; it is the Pimax 5K specifically that has a huge perf regression.

That combined with the trash support for Smart Smoothing and you have a recipe for a trash experience.

I think Pimax must eventually optimize for GCN/RDNA if they don’t want to be cut off from the next console market.

TLDR: at this point the GCN architecture and driver are old (5+ years) and so mature that pointing the finger at AMD drivers (which are also proven to be more stable than Nvidia’s, btw) looks ridiculous even to someone who doesn’t develop software. The only headset I have problems with is Pimax; other headsets had the expected Nvidia-equivalent performance, not 20-30 fps less.

That could be true, except that AMD has also admitted there is an issue with their driver, which is why both are indeed working on the issue. But yes, both need to speed this up. (AMD inside source.)

But yes, with the proper setup and support for a more open architecture, AMD is better for trying to move things forward. Darthtux in the YouTube comments explains Nvidia’s architecture really well in terms of ray tracing capabilities, and why AMD cards kill GTX cards in ray tracing without extra chips to do so.

As far as I know, Pimax isn’t developing for consoles. Or are you saying the PS5 and the next Xbox are going to be compatible with PC headsets in general?

OpenXR could save a lot of headaches if every VR company finally and officially adopted and supported it, but its adoption is currently too slow, and only Oculus has added even initial support for it.

Pimax too should start making plans for early adoption, rather than just hanging on Nvidia for driver compatibility and ready-to-use extra VR features, or waiting until the last minute for Valve to eventually adopt or support it and ending up left behind, following in a hurry…

I don’t know what prices are like in the US, but here in the UK the 2060 is about £50 cheaper than a 5700XT. Crytek’s implementation is barely playable on 5700XT/2060 and doesn’t use the RTX cores on the RTX cards at all, so with an implementation that offloads the raytracing to the RTX cores the RTX cards would romp away from the 5700XT.

Crytek’s demo is deliberately tying one arm behind Nvidia’s back just to implement a very basic and artefact-y version of “raytracing”, which is basically just a modification of the GI implementation. It’s barely playable with low object counts at 1080p and stuttering rubbish at 1440p on anything less than an RTX card, so why then penalise the RTX cards by not implementing an actual RT API?

No need to implement RTX “cores”, and I think you need to read up on the article and the YouTube comments. Darthtux gives very detailed info on RTX cores and ray tracing.

It will be more interesting to see more benches, as Radeon Rays has been available for a very long time, at least as far back as Fury.

So you’d rather call it a fair comparison when half the GPUs in that test have a third of their surface area doing absolutely nothing, when they could be accelerating the very thing the demo purports to be testing for?