Hmm… I’m not sure that’s true though. The Pimax renderer would ‘just’ need to know the specifics of the lens and how to transform the 6 viewports into 1 distorted picture that takes the lens specifics into account, right? I don’t see why this would break SteamVR compatibility.

The projection is different when the eye position is different. If a projected image is generated for an eye very close to the screen and you then look at it from far away, of course the image will look incorrect. The image looks correct only when your eye is at the same position the projection was computed for.

It is the core issue. A lower field of view would fix it.

Or multiple viewports could be rendered, which would increase render requirements by 20 to 30% if they used lens matched shading and SMP (simultaneous multi-projection).

It’s a fixable problem, but they have to want to fix it.
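To put rough numbers on the two options above (a lower FOV, or several viewports), here is a minimal sketch. It assumes ideal flat perspective projections with the total FOV split evenly between the viewports, and it ignores the lens and any panel tilt, so it is not a model of what the Pimax renderer actually does. The function name `edge_stretch` is made up for this illustration.

```python
import math

def edge_stretch(total_fov_deg, viewports=1):
    """Stretch at the outer edge of a flat viewport, relative to its centre.

    Assumption: the total FOV is split evenly across `viewports` flat
    projections, each one facing the middle of its own slice.
    The value is the stretch along the direction away from the centre.
    """
    half_angle = math.radians(total_fov_deg / viewports / 2)
    if half_angle >= math.pi / 2:
        raise ValueError("one flat projection cannot cover 180 degrees or more")
    # On a flat plane x = tan(a), and d(tan a)/da = 1 / cos(a)^2.
    return 1.0 / math.cos(half_angle) ** 2

for fov in (120, 150, 170):
    for n in (1, 2, 3):
        stretch = edge_stretch(fov, n)
        print(f"FOV {fov} deg, {n} viewport(s): edge stretch x{stretch:.2f}")
```

Under these assumptions the edge stretch grows very quickly as a single viewport approaches 180 degrees, and drops sharply as soon as the same FOV is split over two or more viewports.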

I still haven’t bought Fruit Ninja, so I don’t know how much FOV is being used for the new render.

But I tried capturing frames from the video to compare “110” with “200”.

I am not sure why the left and right are stretched so much. I think the Pimax lens will enlarge the center area and reduce the stretching on the left and right.

So I will wait for a video that shows how the lens lets us see the correct scale (or maybe it will be fixed in software).

I hope the final product can give the best possible view. Keep it up. (I would still be okay with it, knowing that the Pimax team can use the native FOV and not only a fixed number of degrees.)

Thanks, that shows very nicely how much it is stretched at the edges.

Damn, I hadn’t even thought about it. But your eyes do actually move. This complicates things indeed. To render perfectly, you’d actually NEED eye tracking and render according to the position of the eye.

Sorry, I find it very hard to understand your English, so I’m not fully sure what you said. The image I showed is what the Pimax would show to one eye, not both eyes obviously.

That’s the whole problem, @yangjiudan. The Tested crew noticed warping WHEN MOVING THEIR EYES.

As long as they looked dead ahead, they didn’t notice distortion. But if they moved their eyes to the periphery, they immediately noticed warping. THAT’S A HUGE PROBLEM. You have to either lower the FOV to 150, or 120, or render multiple viewports. You cannot count on a fixed eye position.

You don’t need eye tracking. When you have a correct image, like the right image here, you can look anywhere and it will look correct.

Eye tracking would help a bit if you have the wrong projection (the left one), but if you moved your eyes then everything would warp around strangely in the world, so it’s absolutely not practical. The only solution is rendering an image that’s correct everywhere, like the right image in that comparison.

Sorry for my poor wording.

What I mean is that the image for the VR HMD will not look correct when you view it on a computer monitor.

That is because the VR HMD screen is very close to your eyes and the computer screen is much farther away, so the correct projection is not the same for the HMD and for the computer screen.
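A quick way to see the point about viewing distance: a flat perspective image only looks right from the distance at which it covers the same angle it was rendered with. The sketch below assumes an ideal flat projection and a hypothetical 60 cm wide monitor; `correct_viewing_distance` is a name made up for this example.

```python
import math

def correct_viewing_distance(image_width, horizontal_fov_deg):
    """Distance from which a flat image of the given width fills the
    horizontal FOV it was rendered with (same unit as image_width)."""
    half_fov = math.radians(horizontal_fov_deg / 2)
    return (image_width / 2) / math.tan(half_fov)

monitor_width_cm = 60.0  # hypothetical monitor, not a measured value
for fov in (90, 110, 150):
    distance = correct_viewing_distance(monitor_width_cm, fov)
    print(f"rendered FOV {fov} deg: view from about {distance:.1f} cm")
```

For wide FOVs the correct distance ends up far closer than anyone sits to a monitor, which is why a wide FOV capture looks stretched at the edges on a desktop screen.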

But if the position of the ‘spectator’ (the eye) moves then the projection would need to change too.

Yeah exactly, because of the distance to the computer screen, the position of the eye doesn’t matter much.

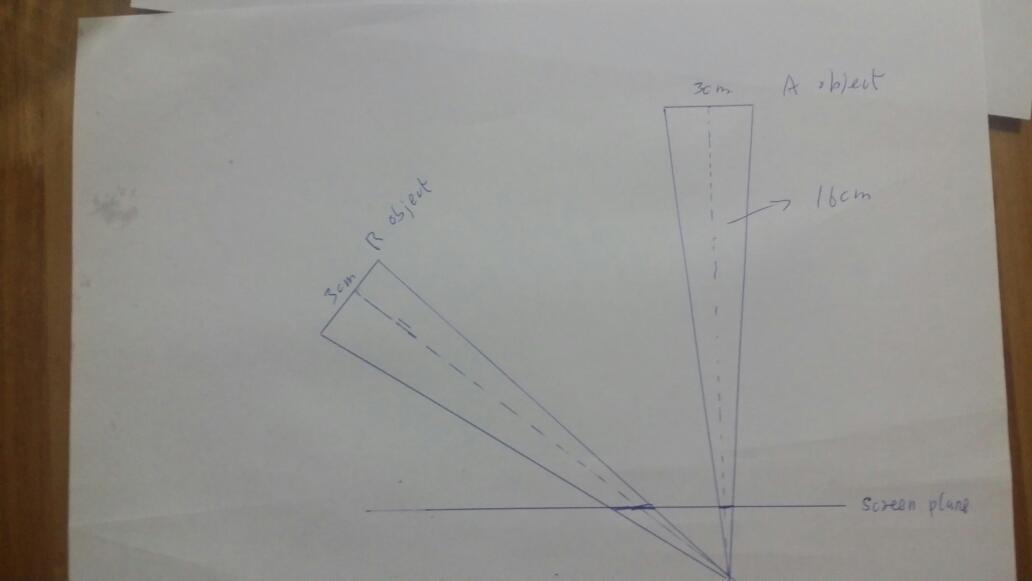

I will post the “stretch” explanation here again.

And I will draw you another picture to show why you cannot sit in front of your PC monitor and judge that the image for the HMD is incorrect.

There is no stretching at any stage of the rendering process.

So the image is not stretched.

The game’s virtual world is a 3D world and the screen is a 2D plane. To show the virtual world on the screen from the user’s eye position and orientation, a perspective projection is needed, and all 3D apps do this projection to display on a 2D screen.

The image below is a simple demonstration:

You can see that objects of the same size located at different positions in the 3D virtual world will have different sizes on the projection plane (the screen).
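The effect described above can also be checked numerically. The sketch below is only an illustration under simple assumptions: a flat projection plane at distance 1 from the eye, and objects that each cover the same 10 degrees of visual angle but sit at different angles off the view axis. The function names are made up for this example.

```python
import math

def projected_width(center_deg, size_deg, plane_distance=1.0):
    """Width on a flat projection plane of an object that spans
    size_deg of visual angle, centred center_deg off the view axis."""
    low = math.radians(center_deg - size_deg / 2)
    high = math.radians(center_deg + size_deg / 2)
    # A ray at angle a hits the plane at x = plane_distance * tan(a).
    return plane_distance * (math.tan(high) - math.tan(low))

def stretch_factor(center_deg, size_deg=10.0):
    """Projected width relative to the same object placed at the centre."""
    return projected_width(center_deg, size_deg) / projected_width(0.0, size_deg)

for angle in (0, 30, 55, 80):
    print(f"object at {angle} deg off axis: x{stretch_factor(angle):.2f} as wide on the plane")
```

The object is drawn wider on the plane the further it sits from the centre. Seen from the projection point that extra width is exactly cancelled by the steeper viewing angle; seen from anywhere else it shows up as stretching.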

@yangjiudan so … then if you move your eyes to the left and look at the edge of the screen, the projection is actually wrong because the engine doesn’t know the position of your eye. Is that correct?

It shouldn’t complicate things if your eye moves.

This is very bad, it means the Pimax team is cheaping out on their rendering.

You cannot make a wide FOV HMD and then expect someone’s eyes to maintain a fixed position. Your eyes make natural saccadic movements every second.

Rendering one viewport per eye is cheating. If they do this, people will get eye fatigue and sim sickness after prolonged usage.

PLEASE @deletedpimaxrep1 @PimaxVR LOWER THE FOV IF YOU ARE ONLY RENDERING ONE VIEWPORT.

Bingo, yes. That is what he is saying lol.

If it can be corrected with eye tracking then all I care about is how long it will take for the eye tracking to be implemented and when the module will be made available. Also sounds like they need to include the eye tracking module as a standard inclusion for everyone.

But then why not just change the viewport according to the eye position? No need to render more than one viewport?

Of course the engine knows the position of the eye.

So are you already using eye tracking in the prototype?