I hope they provide a walkthrough for proper eye-to-lens adjustment. Even if a dynamic distortion algorithm isn’t a thing, hopefully eye tracking is good enough that the software can tell us where our eyes should be in relation to the lenses and how to adjust everything manually.

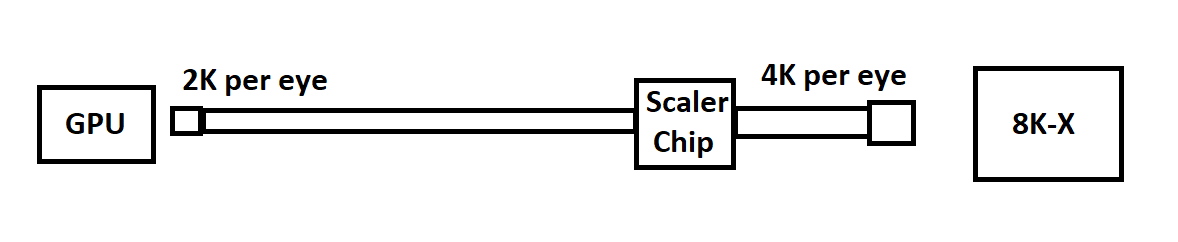

Are you suggesting something like this?

That seems somewhat reasonable. You would basically have a scaler dongle hanging off the headset. Then you could just take the scaler out and play in ACTUAL 4K per eye once you had a powerful enough GPU.

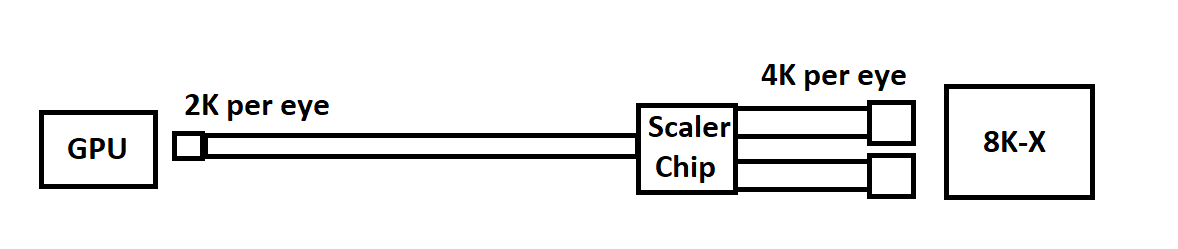

Don’t forget, though, that Pimax is limited by cable standards (DP 1.4) and that they would actually need 2x DP 1.4 connections on the headset. It would end up looking closer to this.
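For anyone who wants to check the 2x DP 1.4 claim, here is a rough back-of-the-envelope sketch. The DP 1.4 link figures (4 lanes at 8.1 Gbit/s, 8b/10b encoding) are the standard HBR3 numbers. The 90 Hz refresh rate and 24 bits per pixel are my assumptions, not something stated in this thread, and blanking overhead is ignored, so real requirements would be somewhat higher.

```python
# DisplayPort 1.4 (HBR3): 4 lanes x 8.1 Gbit/s raw; 8b/10b encoding leaves 80% for payload.
DP14_PAYLOAD_GBPS = 4 * 8.1 * 0.8  # about 25.92 Gbit/s


def video_gbps(width, height, refresh_hz, bits_per_pixel=24, eyes=1):
    """Uncompressed pixel data rate in Gbit/s, ignoring blanking overhead."""
    return width * height * bits_per_pixel * refresh_hz * eyes / 1e9


ASSUMED_REFRESH_HZ = 90  # assumption: the refresh rate is not given in the thread

one_eye = video_gbps(3840, 2160, ASSUMED_REFRESH_HZ)
both_eyes = video_gbps(3840, 2160, ASSUMED_REFRESH_HZ, eyes=2)

print(f"one eye:   {one_eye:.2f} Gbit/s, fits one link: {one_eye <= DP14_PAYLOAD_GBPS}")
print(f"both eyes: {both_eyes:.2f} Gbit/s, fits one link: {both_eyes <= DP14_PAYLOAD_GBPS}")
```

Under those assumptions one 4K eye fits on a single DP 1.4 link but both eyes together do not, which is why two connections would be needed.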

This seems like a reasonable way to make the 8K-X. Just have the scaler dongle as a temporary measure. It would also be WAY less problematic if there were issues with the dongle than if there were issues with a built-in scaler.

Actually, no, but I can see how what I wrote could be read that way.

What I was getting at is that the “4k” screens in the 8k are not truly 4k ones: the first pixel has only red and green subpixels, the second only blue, and it continues to alternate like this for the entire line. Even lines are the same, except that the blue-only pixel comes first, followed by the one with the red-green pair, so that the two kinds are distributed in a checkerboard pattern across the screen.

(An alternative way to look at it is that the panels are full RGB, but instead of proper 4K resolution with a 1:1 pixel aspect ratio (3840 × 2160), they have only 3840 × 1080 with 1:2 (twice the height) pixels, with every second column offset half a (tall) pixel vertically.)

Anyway, this means that if I send a full 4k picture to the screen, a full half of the RGB values will simply be thrown away, because the screen does not have the subpixels to show them.

You could play into this weakness by removing all the unused subpixels from the bit stream on the sending end. That effectively packs two of those incomplete pixels into one complete one, halving the amount of data that needs to be transferred, while still containing every bit that the so-called 4k screen is capable of displaying, in a 2k bitmap. The receiving end then restores it, expanding the stream to the 4k one the screen expects by inserting null (or any value, for that matter) in the places where the unused values would go. So take a finished true 4k image on the computer, after rendering and distortion, something like: RGx xxB RGx xxB, etc. Take away all the “x”:es. Send the resulting RGB RGB image over DP, and let the HMD put something, anything, in the places the “x”:es used to be (EDIT: so back to RGx xxB RGx xxB, which, again, is what the screen has subpixels for). There are no subpixels there to make the x values show up anyway.
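To make the packing idea concrete, here is a minimal sketch in plain Python. It assumes the checkerboard layout described above (RG-only pixel first on the first line, B-only pixel first on the next), an even image width, and images stored as lists of rows of `(R, G, B)` tuples. The names `has_red_green`, `pack` and `unpack` are made up for illustration; this is not how Pimax or any actual hardware does it.

```python
def has_red_green(row, col):
    """True if the panel pixel at (row, col) has R and G subpixels (assumed layout)."""
    return (row + col) % 2 == 0


def pack(image):
    """Sender side: drop the subpixels the panel lacks.

    Each horizontal pair of incomplete pixels becomes one full RGB pixel,
    so the packed image is half as wide as the input.
    """
    packed = []
    for r, line in enumerate(image):
        out = []
        for c in range(0, len(line), 2):
            a, b = line[c], line[c + 1]
            # Work out which pixel of the pair carries R/G and which carries B.
            rg, bl = (a, b) if has_red_green(r, c) else (b, a)
            out.append((rg[0], rg[1], bl[2]))
        packed.append(out)
    return packed


def unpack(packed, fill=0):
    """Headset side: expand back to full width, putting `fill` where the "x" values were."""
    image = []
    for r, line in enumerate(packed):
        out = []
        for i, (red, green, blue) in enumerate(line):
            rg_pixel = (red, green, fill)
            b_pixel = (fill, fill, blue)
            if has_red_green(r, 2 * i):
                out.extend([rg_pixel, b_pixel])
            else:
                out.extend([b_pixel, rg_pixel])
        image.append(out)
    return image


# Tiny 4x2 example image with distinct values per subpixel.
image = [[(r * 100 + c * 10 + 1, r * 100 + c * 10 + 2, r * 100 + c * 10 + 3)
          for c in range(4)] for r in range(2)]
packed = pack(image)
restored = unpack(packed)
```

The round trip loses only the values the panel could not have shown in the first place: every R/G pair on an RG pixel and every B on a B pixel comes back unchanged, while the packed image carries half the data.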

(EDIT2: I am figuring this would make the regular 8k look much better: doing away with the scaler and its softening effect on the image, and utilising the full resolution of the screen, with no need to downsample the transferred image to 1440p.) (EDIT3: …also a much simpler operation than the scaling, the fact that the scaler is an off-the-shelf component notwithstanding :7.)