Bloody AMD fanboys!!! get off our land…

signed

Nvidia fanboy!

Navi 20 could be a game changer.

Navi 12 will be, because it will drop the price of 1080 performance to $250 (with only 56 compute units and less than Vega 64 which is surprising)

Navi 20 will be around 1080ti performance, but if AMD manages to have older single core gpu software take advantage of their chiplet design then it will be an even bigger boost.

I wouldn’t hold out on it for older games. I would expect AMD’s newer architecture to work better on newer games, and on older games the 1080ti/2080ti will always be the best card, especially if Nvidia’s next cards move away from rasterization support and dedicate less of their architecture to it and more to ray tracing (RTX specialised cards gimped for older games).

As far as I see though Navi 12 is being designed with the next-gen consoles in mind, not PC users. the PC “gaming” chip is really Navi 20.

It’s also easy to supersample gen1 headsets. It’s not just pixels but all the extra detail that comes with the increase in screen real estate.

Due to excessive reflections spinning us this way and that we can not see where the RTX OFF border is. Sorry in advance.

That’s also due to not properly utilizing the GPU tech. Just read up on AMD’s Infinity Fabric.

Baseline dude. The 5k+ gives a baseline. Lol

Funny how most people usually buy their GPUs for future-proofing. Come next generation when we see the benchmarks we may see people buying last generations top tier GPU’s for past-proofing and gaming systems being built to play pre ray tracing games because the newer cards may not work as well on the older software.

If that’s not an incentive to buy a 1080ti or a 2080ti I don’t know what is, but I guess only time will tell… and the 3080ti and Navi20 benchmarks.

It is certainly interesting to be sure and actually quite fun watching. I always remembered AMD as, errr, crap. Blue screens here there and everywhere so I stuck with Intel but then all these videos have started to appear about Intel shady practices and how they tied AMD up in court (at huge financial loss) while they monopolized the market using dirty tactics. Now, I detest bullies and so for the first time find I like the steps AMD are taking and kinda enjoying watching Intel take a face full of karma. They deserve it. NVidia? Not so much. My 10 series GPU’s have served me well so far.

As to Navi, I know very little other than reading articles on rockpapershotgun. I get the impression that AMD concentrated on the low/mid end with more effort into power and heat efficiency (and console and Apple) in the past, so were spread quite thin when it comes to PC users, but now I hope their performance range is coming with Zen 2 (5GHz all core?) and of course 7nm Navi. If NVidia really has hit a raw performance wall then AMD can finally catch up…maybe, hopefully.

You see, as to Turing and the tensor cores, I am not convinced on those alone, they make things pretty yes and in my field (3D Animator) that can be very useful for video rendering but for gamers? Hmmm, that needs grunt not eye-candy, VR more so.

I have a feeling that NVidia had the whole GIGA RAYS marketing package as a fall back option (10 years in development too) if they could not deliver on 20 series grunt alone so a 30 series will again be something odd, I will not expect much more raw power though.

I have just read that PC Vega was very understaffed as AMD used almost two thirds of its engineers on PS5 and Apple, so Vega got put on the back burner. I think the first 7nm tech launch will be Navi 10? Sure I read that earlier. Anyway, thanks for your posts, quite educating and they have me off researching bits of what you say.

Nvidia is no better than Intel when it comes to dodgy court tactics and bullshit lawyers, but the most anti-consumer part is gimping their previous GPU cards’ performance with newer drivers and gimping AMD cards from using Hairworks software. Shame their stuff and Intel’s stuff works better, and at the moment there’s no alternative but to use their hardware. If that changes I’m jumping ship.

No doubt AMD Zen 2 has a chance at beating the 9900k marginally in games when it comes out if it has a stock clock speed of 5GHz, which is possible considering it’s at 4.5 now. AMD has always been the underdog between Nvidia and Intel, they market on being second best, although that may change soon.

Turing ray tracing isn’t even proper ray tracing, it’s only ray traced shadows or reflections. In its current implementation it’s hybrid rendering, combining rasterization and ray tracing to achieve the full scene.

This video explains what I mean in full detail

Thanks, I will watch the NVidia video in a moment as have seen the others. One thing that I forgot to ask, you mention earlier about single core GPU in older games but as GPU’s have shader units / cuda cores in the thousands, what do you mean by 1 or 2 gpu cores?

I asked what difference does the 10hz make on the 8k-X, he didn’t even answer that question at all and just started going on about 5k vs 8k-x which has nothing to do with what you said and I responded to.

Yeah you’re right, they do have thousands of cores, but Titan cards had more than one die and the cores GPUs have are mostly virtual cores. The second/additional dies don’t get used in games which are coded in software only to utilise one monolithic gpu die.

Same way old games are coded to only use one or two physical cores on your CPU, and the rest of your cores go unused and are wasted performance… I think it may impact the performance of a Navi 20 chip because of its chiplet design.

Nvidia CUDA cores are virtual cores, and so are ATI Stream processors.

Vega 64 and Vega 56 have 64 and 56 compute units respectively, which generate the stream processors (virtual processor cores). These compute units are physical cores but all on the one die.

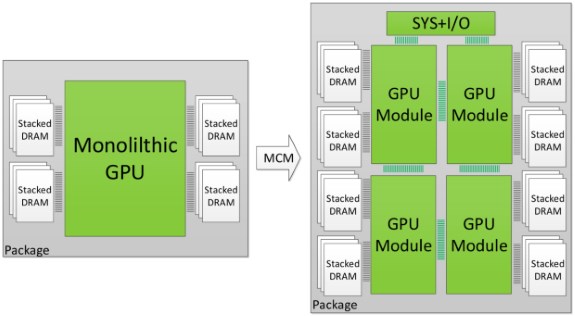

Old games are optimised for GPU processor dies with the architecture seen on the left (monolithic die).

AMD’s new Navi 12/20 GPU architecture is using the chiplet design on the right. This isn’t the multi-die design seen in the past on Titan cards, which doesn’t give additional performance in games; rather, it joins additional GPU dies together using Infinity Fabric. It remains to be seen whether older games will need optimisation to take advantage of the chiplet design in order to see performance gains over a monolithic GPU design like the 1080ti or 2080ti.

If the AMD chiplet GPU design doesn’t see improved performance in older games, the performance will be equivalent to the single core performance and clock speed of the first of the four modules. So only one quarter may work in older games, but it’s yet to be seen whether they managed a workaround to have the extra dies utilised.

Simple truths here

8k-X is a prototype release. Specs are not guaranteed. Its release will likely be DP 1.4, as no bridge chips have DP 1.5 yet and neither do any current GPUs (unless it is simply firmware upgradable).

How will DP 1.5 affect VrLink, whose current spec is a special USB-C with DP 1.4 & power built in?

AMD already has open source realtime ray tracing in Radeon Rays. As far as I know it is not used on current desktop cards (pointless for VR, too much perf hit).

The simple truth: if the 8k-X releases within 6 months it will be based on what is out & available. We may see the new Analogix bridge chips.

Perhaps the consumer release of the 8k-X will have the new bling that is off in the distant horizon.

2080ti has enough trouble running the 5k+ & 8k. StarVR One even demonstrates 5k req is high as they lowered resolution & still left in an option for dual inputs.

Nvidia 20 series is a cashgrab bandaid.

Intel also put the screws to Nvidia when they wanted to develop their own x86 CPUs.

You need to use a baseline to compare. What have we discovered? 80 to 90 no real difference. But we knew this from Oculus dk2 users & Oculus dropping 90 for 72 in the Go.
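To put numbers on the 72 / 80 / 90 comparison, here is a quick sketch of the per-frame time budget the GPU gets at each refresh rate. It is just arithmetic (1000 ms divided by the refresh rate); the function name is mine and nothing here is measured on a real headset.

```python
def frame_time_ms(refresh_hz):
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / refresh_hz


if __name__ == "__main__":
    # Refresh rates mentioned in this thread: Go (72), 80 Hz option, 90 Hz.
    for hz in (72, 80, 90):
        print(f"{hz} Hz -> {frame_time_ms(hz):.2f} ms per frame")
```

Going from 90 to 80 only adds a bit over a millisecond per frame, which fits with people not noticing much difference between the two.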

Things are changing & there are even enough articles on how more refresh is not always better.

Simple truth when it is ready we will know more not before. Some 5k users have expressed having an 80hz option.

Well I know cf does yield some interesting benefits.

My old setup had 2x7950 3g on the p4k.

Single gpu vr crashed & would not run. SteamVR perf test for 1 7950 was Red 1. 2 in Crossfire Average of 3.4 yellow odd green spike of 6.0.

Everything ran & did so well for Med VR. Too bad Nvidia is really restrictive on allowing Sli. But Nvidia doesn’t like easy upgrades or supporting open standards. Hence why they don’t support freesync etc.

Wire thickness isn’t the limiting factor for bandwidth, it is mostly needed for power transmission. The limiting factors are length and number of conductors.

Your options for transmitting more data (ie pixels) are to transmit faster (higher frequency) or to use more conductors. The problem with transmitting faster is that you have to account for EMI (Electro-Magnetic Interference) and the main ways to deal with that are limiting current and reducing cable length. The problem with using more conductors is that the extra wires are expensive, the cables would be heavier, and you have to keep them all time-synced.
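A rough sketch of that trade-off in numbers: the raw pixel data rate is width × height × bits per pixel × refresh rate, and splitting it across more conductors lowers what each one has to carry. The panel resolution (two 3840x2160 panels), 24 bits per pixel and the 4-lane split are assumed example values, not confirmed headset specs, and the calculation ignores blanking intervals, link encoding overhead and compression.

```python
def raw_bandwidth_gbps(width, height, refresh_hz, bits_per_pixel=24, panels=2):
    """Uncompressed pixel data rate in Gbit/s (no blanking or encoding overhead)."""
    bits_per_second = width * height * bits_per_pixel * refresh_hz * panels
    return bits_per_second / 1e9


def per_lane_gbps(total_gbps, lanes):
    """Rate each lane must carry if the data is split evenly across `lanes`."""
    return total_gbps / lanes


if __name__ == "__main__":
    # Assumed example: two 3840x2160 panels at 24 bits per pixel.
    for hz in (80, 90):
        total = raw_bandwidth_gbps(3840, 2160, hz)
        print(f"{hz} Hz: {total:.2f} Gbit/s total, "
              f"{per_lane_gbps(total, 4):.2f} Gbit/s per lane over 4 lanes")
```

Either the per-lane rate goes up (faster signalling, more EMI trouble) or the lane count goes up (more wires to pay for and keep in sync), which is the choice described above.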

Optical cables solve a lot of these issues but have more of their own. You don’t really have to worry about EMI and can make your cables longer. However, optical cables are relatively fragile (not the best fit for roomscale). They are also unable to provide the electrical power needed by the headset.

If cf’s new iteration xGMI sees stacked 200% improvement gains per additional gpu and more than 2 GPU multi, I would go x4 Navi 12 $250 GPUs just to spite nvidia’s anti consumer tactics and also for the lols.

Having thought about it, I think my point really is that an 8k+ is a more feasible headset to run at full refresh rate than the 8KX, seeing as it would have an improved full RGB 4k screen compared to the 8k’s pentile screen but still retain the upscaler of the 8k that the 8KX doesn’t have. Therefore an 8k+ would have the same performance requirements as the 8k.

Ahhhh ok, now I am with you when you said performance may drop, as each GPU die is a sum of the whole? But all this hinges on how many instructions can run per clock cycle? If it is just one then it will be like multi-threading, which has its downsides, but if AMD manage to run 4 instructions in parallel somehow then it should be a beast. Maybe the first GPU die is more powerful for non-sequential instructions and the others are cut down versions, so performance will not be hit too hard in backwards compatibility, but in modern games the instruction set will be heavily paralleled. I am flying at the edge of my capability here with what you are saying and some research but it is making some kind of sense…ish now.

Now I understand a bit better why Intel released a 8c/8t CPU.